Supervised Audio Denoising

What is a Supervised Model?

A supervised model is a type of machine learning algorithm that learns to map inputs to known outputs from labelled data. In audio denoising, the input is a noisy recording and the target (label) is the corresponding clean recording. The model learns to predict the clean waveform from the noisy input by minimizing a loss that measures the difference between its output and the true clean audio. Supervised learning therefore requires paired data: here, every noisy training file has a clean counterpart.
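
To make the idea concrete, here is a minimal sketch of supervised learning on paired signals. It uses NumPy rather than the PyTorch of the script below, which is an assumption made to keep it short. It learns the weights of a single linear FIR filter (essentially one Conv1d layer without a nonlinearity) that maps a synthetic noisy tone to its clean version by gradient descent on the mean squared error. The function names `fit_fir_denoiser` and `apply_fir` and the synthetic signals are hypothetical.

```python
import numpy as np

def apply_fir(x, w):
    """Slide weights w over x (cross-correlation, as nn.Conv1d does); output has len(x) samples."""
    pad = len(w) // 2
    windows = np.lib.stride_tricks.sliding_window_view(np.pad(x, pad), len(w))
    return windows @ w

def fit_fir_denoiser(noisy, clean, taps=9, lr=0.1, steps=500):
    """Learn FIR weights w so that apply_fir(noisy, w) approximates clean under MSE."""
    assert taps % 2 == 1, "an odd tap count keeps input and output the same length"
    # Each row holds the `taps` noisy samples centred on one output sample: shape (N, taps)
    windows = np.lib.stride_tricks.sliding_window_view(np.pad(noisy, taps // 2), taps)
    w = np.zeros(taps)
    for _ in range(steps):
        err = windows @ w - clean                  # prediction error for every sample
        grad = 2.0 * windows.T @ err / len(clean)  # gradient of the MSE with respect to w
        w -= lr * grad                             # plain gradient-descent step
    return w

# Hypothetical paired data: a clean 200 Hz tone and the same tone with white noise added.
rng = np.random.default_rng(0)
t = np.arange(4000) / 16000
clean = np.sin(2 * np.pi * 200 * t)
noisy = clean + 0.3 * rng.standard_normal(t.size)

w = fit_fir_denoiser(noisy, clean)
print("MSE before:", np.mean((noisy - clean) ** 2))
print("MSE after: ", np.mean((apply_fir(noisy, w) - clean) ** 2))
```

Because the noise is uncorrelated from sample to sample while the tone is smooth, the learned filter should average the noise down, so the second MSE is expected to be lower than the first. The ConvNet in this post follows the same recipe, only with more layers and nonlinearities.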

How This Simple Model Works

I implemented a lightweight 1D convolutional neural network to demonstrate supervised denoising. Here’s the core workflow:

- Dataset preparation: Clean and noisy files are loaded from two folders and paired by filename, then converted to mono and peak-normalized for stable training. Only the first second of each file (16,000 samples at 16 kHz) is used during training, which speeds up training and keeps memory use low.

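- Time Embedding: A scalar t is drawn at random for each example, encoded with a 32-dimensional sinusoidal embedding (the construction used for timestep conditioning in diffusion models), passed through a small MLP, and added as a per-channel bias after the first convolution. Since t is drawn independently of the audio, it carries no information about the signal itself, so its effect is closer to a random perturbation of the first-layer features than to conditioning on the audio's temporal characteristics.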

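- 1D Convolutional Network: The model stacks three 1D convolutional layers, each with kernel size 9 and padding 4 so the output keeps the input length. The first maps the mono input to 32 feature channels and receives the time embedding, the second processes these features, and the third maps them back to a single-channel denoised waveform. ReLU activations follow the first two layers. The architecture is deliberately small and meant as a demonstration rather than a state-of-the-art denoiser.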
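
The preparation step can be sketched in NumPy with a hypothetical helper, `prepare_pair`, assuming each recording arrives as a `(channels, samples)` array already at the target sample rate. It downmixes to mono, crops or zero-pads to a fixed length so examples can be stacked into a batch, and scales both signals by the same factor (the noisy peak). The shared factor is an assumption made here so that the clean target keeps its level relative to the noisy input.

```python
import numpy as np

def fix_length(x, num_samples):
    """Crop or zero-pad a (channels, samples) array to exactly num_samples samples."""
    x = x[:, :num_samples]
    return np.pad(x, ((0, 0), (0, num_samples - x.shape[1])))

def prepare_pair(noisy, clean, num_samples, eps=1e-8):
    """Hypothetical helper: mono, fixed-length, jointly peak-normalized noisy/clean pair."""
    # Downmix to mono by averaging channels, keeping a channel axis of size 1.
    noisy = noisy.mean(axis=0, keepdims=True)
    clean = clean.mean(axis=0, keepdims=True)
    # Equal lengths let the examples be stacked into a batch.
    noisy = fix_length(noisy, num_samples)
    clean = fix_length(clean, num_samples)
    # One shared scale factor: the noisy peak becomes 1, the clean target keeps its relative level.
    scale = max(float(np.abs(noisy).max()), eps)
    return noisy / scale, clean / scale
```

For example, a 0.5-second stereo clip at 16 kHz passed with `num_samples=16000` comes back as two `(1, 16000)` arrays whose last 8,000 samples are zeros.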

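A NumPy port of `sinusoidal_embedding` from the script below shows the shapes involved. The helper `add_time_bias` is hypothetical: it mirrors the `x + t_emb` line in `U1DNet.forward` but skips the small MLP that the model applies first. Because t is one scalar per example, the same offset is added at every time step of a channel.

```python
import math
import numpy as np

def sinusoidal_embedding(t, dim=32):
    """NumPy port: t has shape (B,), the result has shape (B, dim)."""
    half_dim = dim // 2
    # Geometric frequency ladder from 1 down to 10000 ** (-(half_dim - 1) / half_dim)
    freqs = np.exp(np.arange(half_dim) * -(math.log(10000) / half_dim))
    angles = t[:, None] * freqs[None, :]                          # (B, half_dim)
    return np.concatenate([np.sin(angles), np.cos(angles)], axis=-1)

def add_time_bias(features, bias):
    """features: (B, C, T); bias: (B, C). The same offset is added at every time step."""
    return features + bias[:, :, None]

rng = np.random.default_rng(0)
t = rng.random(4)                      # one random scalar per example, like torch.rand(B)
emb = sinusoidal_embedding(t, dim=32)  # (4, 32)
features = rng.standard_normal((4, 32, 1000))
out = add_time_bias(features, emb)     # (4, 32, 1000)
```

The first half of each embedding holds sines and the second half cosines of the same angles, so each sine/cosine pair lies on the unit circle.
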
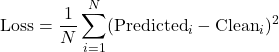
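
The size and context of the network can be checked with a few lines of arithmetic. This sketch assumes the layer settings in `U1DNet` (three Conv1d layers with kernel size 9 and padding 4, plus a two-layer 32-unit time MLP) and uses the output-length formula from the PyTorch Conv1d documentation; the helper names are hypothetical.

```python
def conv1d_out_len(length, kernel, padding, stride=1, dilation=1):
    """Output length of a Conv1d layer (formula from the PyTorch documentation)."""
    return (length + 2 * padding - dilation * (kernel - 1) - 1) // stride + 1

def receptive_field(kernels):
    """Stride-1, undilated layers: each adds (k - 1) samples of context."""
    return 1 + sum(k - 1 for k in kernels)

def conv1d_params(c_in, c_out, k):
    return c_out * c_in * k + c_out  # weights + biases

def linear_params(n_in, n_out):
    return n_out * n_in + n_out      # weights + biases

convs = [(1, 32, 9), (32, 32, 9), (32, 1, 9)]  # (in_channels, out_channels, kernel_size)
total = sum(conv1d_params(*c) for c in convs) + 2 * linear_params(32, 32)
rf = receptive_field([k for _, _, k in convs])
print(total, rf, 1000 * rf / 16000)  # prints: 11969 25 1.5625
```

With roughly 12k parameters the model is indeed lightweight, but each output sample depends on only 25 input samples (about 1.6 ms at 16 kHz). That short context is one motivation for larger receptive fields, such as the multi-scale convolutions mentioned as a next step.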

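- Training: The loss is the mean squared error (MSE) between the network output and the clean target. The network is trained with Adam (learning rate 1e-3) on batches of four 1-second segments for 10 epochs, and a fresh random t is drawn for every example in each batch, with the intent of encouraging more flexible learning.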

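$$\mathcal{L}_{\mathrm{MSE}} = \frac{1}{N}\sum_{n=1}^{N}\left(\hat{x}_n - x_n\right)^2 \tag{1}$$

where $\hat{x}_n$ is the network output, $x_n$ is the clean target, and $N$ is the number of values in the batch (all examples, channels, and time steps).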
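
The loss in `supervised_denoising_loss` reduces over every element of the batch. The NumPy sketch below computes that mean squared error together with its analytic gradient with respect to the prediction, 2 * (pred - target) / N, which is what backpropagation passes into the last layer, and checks the gradient by central finite differences. The function names are hypothetical.

```python
import numpy as np

def mse(pred, target):
    """Mean squared error over every element (batch, channel, time)."""
    return np.mean((pred - target) ** 2)

def mse_grad(pred, target):
    """Analytic gradient of mse with respect to pred: 2 * (pred - target) / N."""
    return 2.0 * (pred - target) / pred.size

# Central finite-difference check on one element of a (batch, channel, time) array.
rng = np.random.default_rng(0)
pred = rng.standard_normal((4, 1, 100))
target = rng.standard_normal((4, 1, 100))
idx, eps = (2, 0, 37), 1e-6
bumped_up, bumped_down = pred.copy(), pred.copy()
bumped_up[idx] += eps
bumped_down[idx] -= eps
numeric = (mse(bumped_up, target) - mse(bumped_down, target)) / (2 * eps)
print(numeric, mse_grad(pred, target)[idx])
```

Since the loss is quadratic, the central difference matches the analytic gradient up to floating-point rounding.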

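- Inference: A full-length recording is processed in consecutive, non-overlapping 1-second chunks (the last chunk is zero-padded and the padding is trimmed from the output), and the outputs are concatenated. Optionally, overlap-add smoothing could be applied to avoid small discontinuities at chunk boundaries. The result is a denoised waveform and an estimated noise signal (the noisy input minus the denoised output), both saved for inspection.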
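
The optional overlap-add smoothing could look like the NumPy sketch below. Assumptions: `process` stands in for a call to the trained model on one chunk and must return a chunk of the same length, frames overlap by 50%, and each processed frame is multiplied by a periodic Hann window. At 50% overlap these windows sum to exactly one, so consecutive outputs are cross-faded instead of butted together. The function name `overlap_add` is hypothetical.

```python
import numpy as np

def overlap_add(x, process, frame_len=16000):
    """Process 1-D signal x in 50%-overlapping frames and cross-fade the outputs.

    `process` stands in for the trained model: it maps a frame of frame_len
    samples to an output frame of the same length.
    """
    assert frame_len % 2 == 0, "50% overlap needs an even frame length"
    hop = frame_len // 2
    # Periodic Hann window; shifted copies spaced hop apart sum to exactly 1.
    window = 0.5 - 0.5 * np.cos(2 * np.pi * np.arange(frame_len) / frame_len)
    # Zero-pad half a frame in front and enough at the end that every input
    # sample is covered by exactly two frames.
    n_frames = int(np.ceil(len(x) / hop)) + 1
    padded = np.zeros((n_frames + 1) * hop)
    padded[hop:hop + len(x)] = x
    out = np.zeros_like(padded)
    for i in range(n_frames):
        seg = slice(i * hop, i * hop + frame_len)
        # Window the output, not the input, so the model sees unmodified audio.
        out[seg] += window * process(padded[seg])
    return out[hop:hop + len(x)]

# Sanity check with an identity "model": the input is reconstructed exactly.
rng = np.random.default_rng(0)
x = rng.standard_normal(40000)
y = overlap_add(x, lambda frame: frame, frame_len=16000)
```

With the trained network, `process` could wrap the same `model(chunk, t)` call used in `denoise_full_audio`, converting between NumPy arrays and tensors; this is an untested suggestion. The trade-off is roughly twice as many forward passes, since every sample is covered by two frames.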

Despite its simplicity, the model handles stationary, moderate noise reasonably well, which shows that even a lightweight 1D ConvNet can learn to clean audio in a supervised setting.

As a next step, I plan to extend the model with skip connections or multi-scale convolutions to improve quality. Additionally, exploring spectral-domain losses (e.g., STFT loss) could yield perceptually better denoising results.
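
One common form of STFT loss, used in multi-resolution STFT losses, adds a spectral-convergence term to a log-magnitude distance. The NumPy sketch below computes a single-resolution version for illustration only; the FFT size, hop, and function names are assumptions. To train with it, the same computation would need to be written with `torch.stft` so that gradients can flow back to the network.

```python
import numpy as np

def stft_mag(x, n_fft=512, hop=128):
    """Magnitude STFT of a 1-D signal: periodic Hann window, no padding."""
    window = 0.5 - 0.5 * np.cos(2 * np.pi * np.arange(n_fft) / n_fft)
    frames = np.lib.stride_tricks.sliding_window_view(x, n_fft)[::hop]  # (frames, n_fft)
    return np.abs(np.fft.rfft(frames * window, axis=-1))               # (frames, n_fft // 2 + 1)

def stft_loss(pred, target, n_fft=512, hop=128, eps=1e-7):
    """Spectral convergence plus mean absolute log-magnitude difference."""
    p = stft_mag(pred, n_fft, hop)
    t = stft_mag(target, n_fft, hop)
    spectral_convergence = np.linalg.norm(t - p) / max(np.linalg.norm(t), eps)
    log_magnitude = np.mean(np.abs(np.log(t + eps) - np.log(p + eps)))
    return spectral_convergence + log_magnitude

rng = np.random.default_rng(0)
clean = np.sin(2 * np.pi * 440 * np.arange(16000) / 16000)
slightly_noisy = clean + 0.01 * rng.standard_normal(clean.size)
very_noisy = clean + 0.3 * rng.standard_normal(clean.size)
print(stft_loss(slightly_noisy, clean), stft_loss(very_noisy, clean))
```

The log-magnitude term weights quiet spectral regions more heavily than a waveform MSE does, which is one reason such losses are expected to track perceived quality better.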

Dataset used:

University of Edinburgh DataShare – VoiceBank-DEMAND corpus

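(I used smaller clean and noisy test subsets for my experiments. The corpus is distributed at 48 kHz; the script below does not resample during training, so the files in its cleanTrainset16k and noisyTrainset16k folders are expected to be at 16 kHz already.)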

If you’d like to support my audio content, you can find me here:

https://buymeacoffee.com/hakanyurdakul

import os
import math
import torch
import torch.nn as nn
import torch.optim as optim
import torchaudio
import matplotlib.pyplot as plt
from torch.utils.data import Dataset, DataLoader

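# --- Sinusoidal time embedding ---
def sinusoidal_embedding(t, dim=32):
    # t: shape [B] (one scalar per example) -> returns shape [B, dim]
    device = t.device
    half_dim = dim // 2
    # Geometrically spaced frequencies, as in Transformer/diffusion timestep embeddings
    emb = torch.exp(torch.arange(half_dim, device=device) * -(math.log(10000) / half_dim))
    emb = t[:, None] * emb[None, :]
    emb = torch.cat((emb.sin(), emb.cos()), dim=-1)
    return emb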

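# --- 1D ConvNet with time embedding ---
class U1DNet(nn.Module):
    def __init__(self, time_emb_dim=32):
        super().__init__()
        self.time_emb_dim = time_emb_dim
        # Small MLP mapping the sinusoidal embedding to one bias per conv1 channel
        self.time_mlp = nn.Sequential(
            nn.Linear(time_emb_dim, 32),
            nn.ReLU(),
            nn.Linear(32, 32)
        )
        # kernel_size=9 with padding=4 keeps the output length equal to the input length
        self.conv1 = nn.Conv1d(1, 32, 9, padding=4)
        self.conv2 = nn.Conv1d(32, 32, 9, padding=4)
        self.conv3 = nn.Conv1d(32, 1, 9, padding=4)

    def forward(self, x, t):
        # x: [B, 1, T] noisy waveform, t: [B] scalars
        t_emb = sinusoidal_embedding(t, dim=self.time_emb_dim)
        t_emb = self.time_mlp(t_emb).unsqueeze(-1)  # [B, 32, 1], broadcast over time
        x = self.conv1(x)
        x = x + t_emb
        x = torch.relu(x)
        x = torch.relu(self.conv2(x))
        x = self.conv3(x)  # [B, 1, T] denoised waveform
        return x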

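# --- Dataset ---
class AudioDenoiseDataset(Dataset):
    def __init__(self, clean_dir, noisy_dir, sr=16000, duration=None):
        self.clean_dir = clean_dir
        self.noisy_dir = noisy_dir
        # Clean and noisy files are paired by identical filenames
        self.files = sorted(os.listdir(clean_dir))
        self.sr = sr
        self.duration = duration

    def __len__(self):
        return len(self.files)

    def __getitem__(self, idx):
        clean_path = os.path.join(self.clean_dir, self.files[idx])
        noisy_path = os.path.join(self.noisy_dir, self.files[idx])
        # Files are assumed to be stored at self.sr already (no resampling here)
        clean, _ = torchaudio.load(clean_path)
        noisy, _ = torchaudio.load(noisy_path)
        # Downmix to mono, keeping a channel dimension: [1, T]
        clean = clean.mean(dim=0, keepdim=True)
        noisy = noisy.mean(dim=0, keepdim=True)
        if self.duration:
            num_samples = int(self.sr * self.duration)
            clean = clean[:, :num_samples]
            noisy = noisy[:, :num_samples]
            # Zero-pad short clips so every item in a batch has the same length
            clean = torch.nn.functional.pad(clean, (0, num_samples - clean.size(1)))
            noisy = torch.nn.functional.pad(noisy, (0, num_samples - noisy.size(1)))
        # Scale both signals by the same factor (the noisy peak) so the clean target
        # keeps its level relative to the noisy input; clamp avoids division by zero
        scale = noisy.abs().max().clamp(min=1e-8)
        clean = clean / scale
        noisy = noisy / scale
        return noisy, clean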

# --- Supervised denoising loss ---
def supervised_denoising_loss(model, x_noisy, x_clean, t):
    pred = model(x_noisy, t)
    return ((pred - x_clean) ** 2).mean()

# --- Parameters ---
device = "cuda" if torch.cuda.is_available() else "cpu"
sr = 16000
train_duration = 1  # seconds for training slices
batch_size = 4
num_epochs = 10
learning_rate = 1e-3

# --- Dataset and DataLoader ---
dataset = AudioDenoiseDataset('cleanTrainset16k',
                              'noisyTrainset16k',
                              sr=sr, duration=train_duration)
dataloader = DataLoader(dataset, batch_size=batch_size, shuffle=True)

# --- Initialize model and optimizer ---
model = U1DNet(time_emb_dim=32).to(device)
optimizer = optim.Adam(model.parameters(), lr=learning_rate)

# --- Training loop ---
for epoch in range(num_epochs):
    model.train()
    for x_noisy, x_clean in dataloader:
        x_noisy = x_noisy.to(device)
        x_clean = x_clean.to(device)
        # One random scalar t per example in the batch
        t = torch.rand(x_noisy.size(0), device=device)
        loss = supervised_denoising_loss(model, x_noisy, x_clean, t)
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
    # Reports the loss of the last batch in the epoch
    print(f"Epoch {epoch+1}/{num_epochs}: loss={loss.item():.4f}")

# --- Save trained model ---
torch.save(model.state_dict(), "denoiser.pth")

# --- Inference on full-length audio ---
def denoise_full_audio(model, noisy_waveform, sr=16000, chunk_duration=1):
    # noisy_waveform: [1, T] mono tensor; returns a 1-D tensor of length T
    model.eval()
    device = next(model.parameters()).device
    num_samples = noisy_waveform.size(1)
    chunk_size = int(sr * chunk_duration)
    output = []
    with torch.no_grad():
        # Non-overlapping chunks; outputs are concatenated without smoothing
        for start in range(0, num_samples, chunk_size):
            end = min(start + chunk_size, num_samples)
            chunk = noisy_waveform[:, start:end]
            # If last chunk shorter than chunk_size, pad
            if chunk.size(1) < chunk_size:
                pad_len = chunk_size - chunk.size(1)
                chunk = torch.nn.functional.pad(chunk, (0, pad_len))
            chunk = chunk.unsqueeze(0).to(device)  # [1, 1, chunk_size]
            t = torch.rand(1, device=device)  # random t, as during training
            denoised_chunk = model(chunk, t)
            # Remove padding if added
            denoised_chunk = denoised_chunk[:, :, :end-start]
            output.append(denoised_chunk.cpu())
    return torch.cat(output, dim=2).squeeze()

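# Load test noisy audio (full-length)
test_noisy_path = 'yourTestMonoAudioFile.wav'
x_noisy_test, file_sr = torchaudio.load(test_noisy_path)
x_noisy_test = x_noisy_test.mean(dim=0, keepdim=True)
# Resample if the file is not at the rate the model was trained on
if file_sr != sr:
    x_noisy_test = torchaudio.functional.resample(x_noisy_test, file_sr, sr)
x_noisy_test = x_noisy_test / x_noisy_test.abs().max()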

# Denoise full audio
denoised_waveform = denoise_full_audio(model, x_noisy_test, sr=sr, chunk_duration=train_duration)

# Save denoised audio
torchaudio.save("denoised_output.wav", denoised_waveform.unsqueeze(0), sr)

# Save estimated noise
estimated_noise = x_noisy_test - denoised_waveform.unsqueeze(0)
torchaudio.save("noise_output.wav", estimated_noise, sr)

# --- Plot comparison ---
plt.figure(figsize=(10, 9))

# Noisy input
plt.subplot(3, 1, 1)
plt.plot(x_noisy_test.squeeze().cpu().numpy(), color='r')
plt.title("Noisy Input")
plt.xlabel("Sample Index")
plt.ylabel("Amplitude")

# Denoised output
plt.subplot(3, 1, 2)
plt.plot(denoised_waveform.numpy(), color='b')
plt.title("Denoised Output")
plt.xlabel("Sample Index")
plt.ylabel("Amplitude")

# Estimated noise
plt.subplot(3, 1, 3)
plt.plot(estimated_noise.squeeze().cpu().numpy(), color='g')
plt.title("Estimated Noise")
plt.xlabel("Sample Index")
plt.ylabel("Amplitude")

plt.tight_layout()
plt.show()

[1] State-of-the-Art in 1D Convolutional Neural Networks: A Survey

[2] A Fully Convolutional Neural Network for Speech Enhancement

[3] A Review of Deep Learning Techniques for Speech Processing

[4] University of Edinburgh DataShare – VoiceBank-DEMAND corpus